L1 and L2 Regularization in Machine Learning

Logistic regression basics. The concept of regularization is explained in a simple way, with a detailed discussion of the L1 and L2 regularization used in linear regression.

How To Use Weight Decay To Reduce Overfitting Of Neural Network In Keras

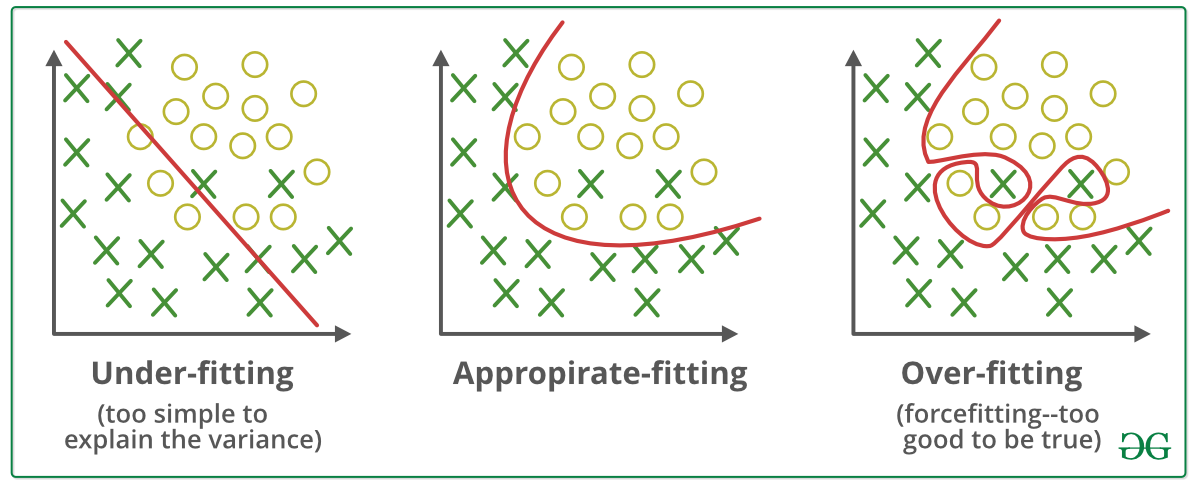

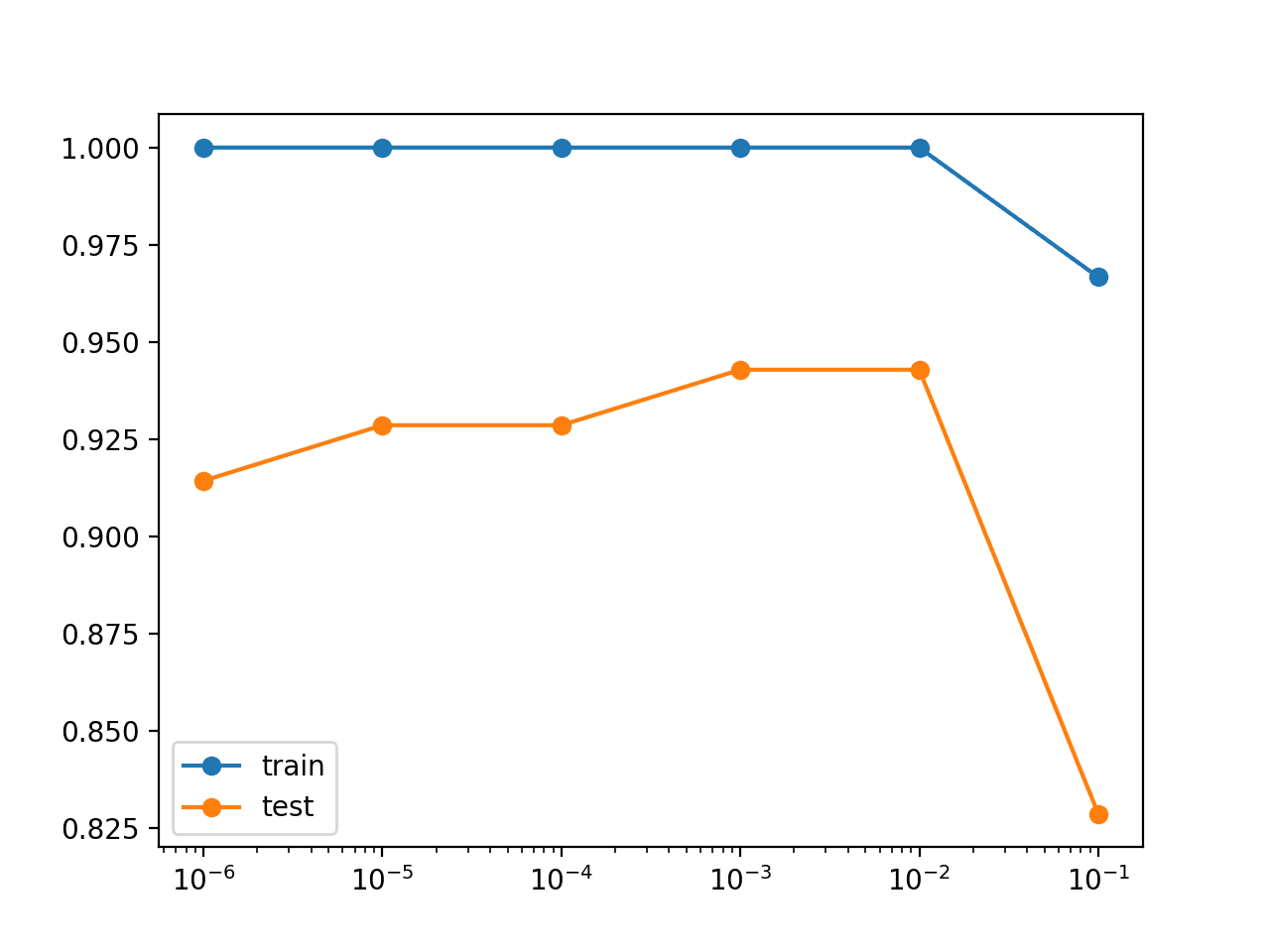

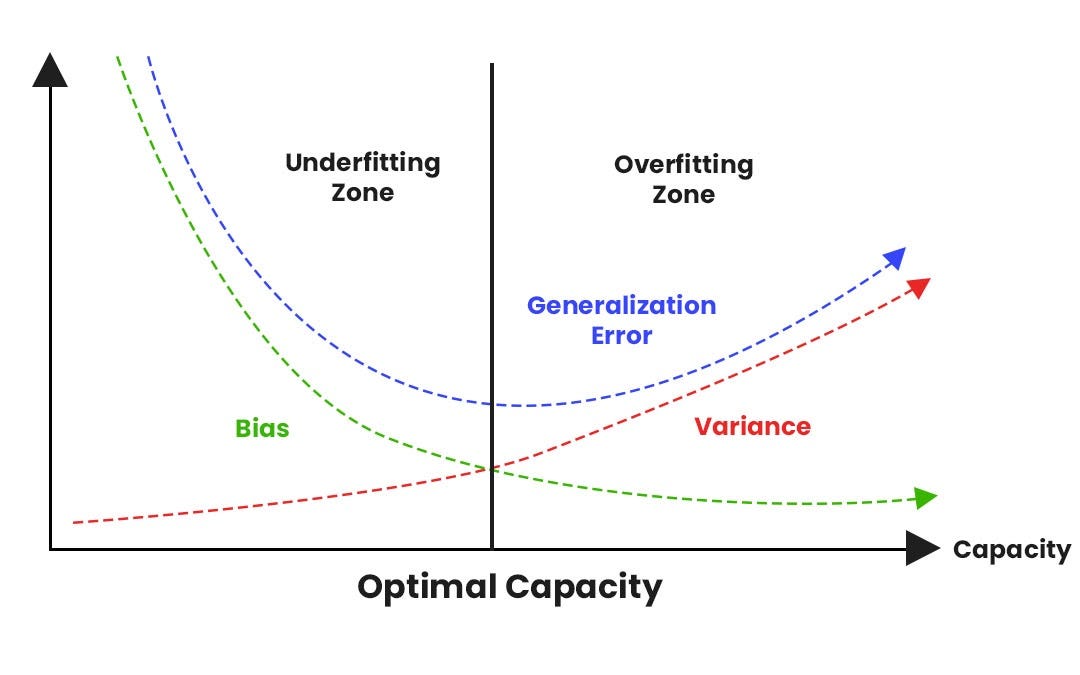

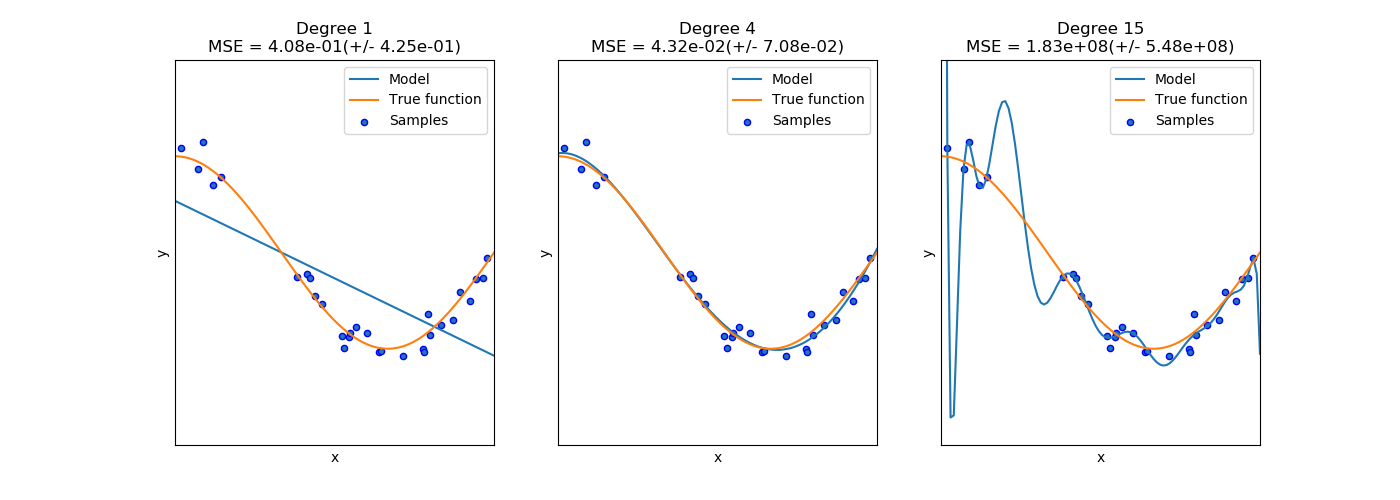

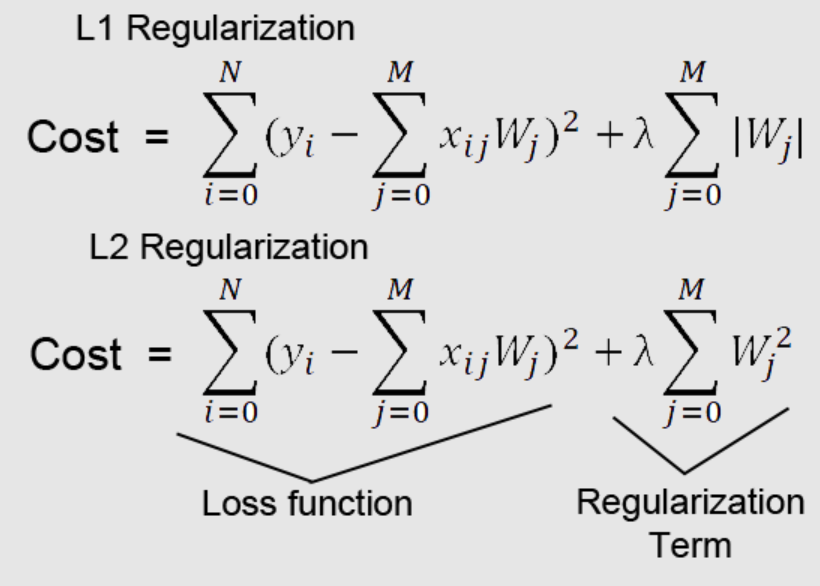

When first learning about L1 and L2 regularization, we usually learn that both can prevent overfitting.

Sparsity in this context refers to the fact that some parameters have an optimal value of zero. Following is a list of equations we will need for an implementation of logistic regression.

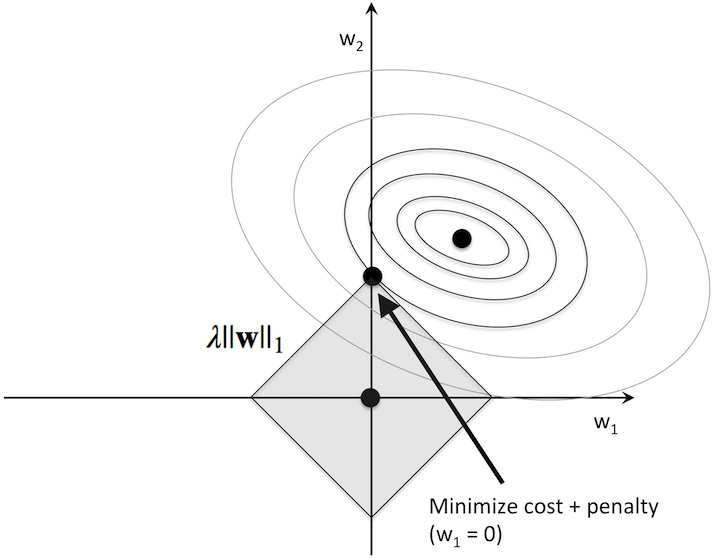

They are in matrix form. In L1 regularization we shrink the weights using the absolute values of the weight coefficients (the weight vector $w$).

What is $\ell_1$ regularization?

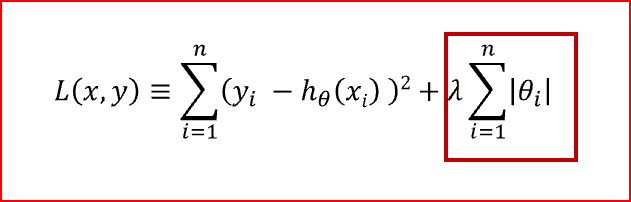

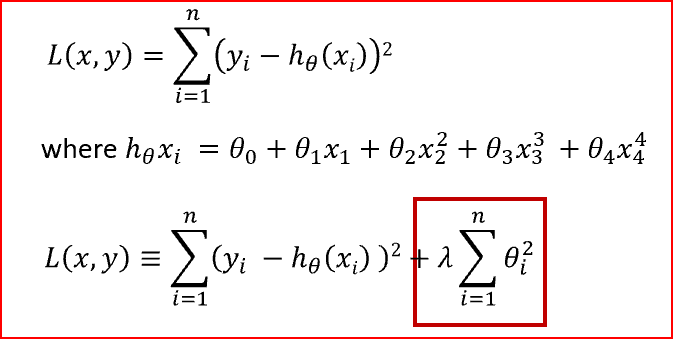

L1 regularization is also called Lasso, L2 regularization is also called Ridge, and the combined L1/L2 regularization is also called Elastic Net. You can find the R code for regularization at ...
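As a sketch of how the three variants differ, the penalized objectives are commonly written as below. The symbols $L(w)$ (the unregularized loss), $\lambda$, $\lambda_1$ and $\lambda_2$ (penalty strengths) are notation assumed here for illustration; different texts scale the terms differently.

```latex
\begin{aligned}
\text{Lasso (L1):} \quad & J(w) = L(w) + \lambda \sum_{j} |w_j| \\
\text{Ridge (L2):} \quad & J(w) = L(w) + \lambda \sum_{j} w_j^2 \\
\text{Elastic Net (L1 + L2):} \quad & J(w) = L(w) + \lambda_1 \sum_{j} |w_j| + \lambda_2 \sum_{j} w_j^2
\end{aligned}
```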

Technically, regularization avoids overfitting by adding a penalty to the model's loss function. Regularization is a very important technique in machine learning to prevent overfitting.
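A minimal sketch of what "adding a penalty to the loss function" can look like for a linear model, in plain Python. The function names, the toy data, and the choice of mean squared error as the base loss are all assumptions made for illustration.

```python
def mse(weights, rows, targets):
    """Mean squared error of a linear model without an intercept."""
    total = 0.0
    for features, target in zip(rows, targets):
        prediction = sum(w * x for w, x in zip(weights, features))
        total += (prediction - target) ** 2
    return total / len(targets)


def l1_penalty(weights):
    """Sum of absolute values of the weights."""
    return sum(abs(w) for w in weights)


def l2_penalty(weights):
    """Sum of squared weights."""
    return sum(w * w for w in weights)


def regularized_loss(weights, rows, targets, lam, penalty):
    """Data loss plus lam times the chosen penalty on the weights."""
    return mse(weights, rows, targets) + lam * penalty(weights)


# Toy data (made up): the target is 2 * x1, and x2 is irrelevant.
rows = [(1.0, 0.5), (2.0, -1.0), (3.0, 0.0)]
targets = [2.0, 4.0, 6.0]
weights = [2.0, 0.0]

plain = mse(weights, rows, targets)
with_l1 = regularized_loss(weights, rows, targets, 0.1, l1_penalty)
with_l2 = regularized_loss(weights, rows, targets, 0.1, l2_penalty)
print(plain, with_l1, with_l2)
```

Even though these weights fit the toy data exactly, the regularized losses are nonzero: the penalty charges the model for the size of its weights, independently of how well it fits.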

In $\ell_1$ regularization, the sum of the absolute values of the weights is added to the loss as a penalty.

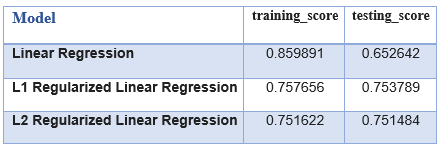

The regression model that uses L1 regularization is called Lasso Regression, and the model that uses L2 is known as Ridge Regression. There are three commonly used regularization techniques: L1, L2, and Elastic Net.

In comparison to L2 regularization, L1 regularization results in a solution that is more sparse. Mathematically speaking, regularization adds a term to the loss in order to prevent the coefficients from fitting the training data so closely that the model overfits.
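The sparsity difference can be illustrated in the simplest possible setting, a single coefficient with a quadratic data term. This is a sketch under that assumption (it also matches the per-coefficient solution when the features are orthonormal); the function names and the example coefficients are hypothetical.

```python
def lasso_1d(z, lam):
    """Minimizer of 0.5 * (w - z)**2 + lam * abs(w): soft-thresholding."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


def ridge_1d(z, lam):
    """Minimizer of 0.5 * (w - z)**2 + 0.5 * lam * w**2: uniform shrinkage."""
    return z / (1.0 + lam)


# Hypothetical unregularized coefficients, chosen only for illustration.
unregularized = [3.0, -0.4, 0.2, -2.5, 0.05]
lam = 0.5

lasso_weights = [lasso_1d(z, lam) for z in unregularized]
ridge_weights = [ridge_1d(z, lam) for z in unregularized]

lasso_zeros = sum(1 for w in lasso_weights if w == 0.0)
ridge_zeros = sum(1 for w in ridge_weights if w == 0.0)
print(lasso_weights)             # [2.5, 0.0, 0.0, -2.0, 0.0]
print(lasso_zeros, ridge_zeros)  # 3 0
```

The L1 solution sets every coefficient whose magnitude is at most `lam` exactly to zero, while the L2 solution only scales coefficients toward zero without reaching it.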

The goal of $\ell_1$ regularization is to encourage the network to make use of small weights. L1 and L2 regularization are both essential topics in machine learning.

$\lambda$ is the regularization parameter to be optimized. L1 regularization and L2 regularization are two closely related techniques that can be used by machine learning (ML) training algorithms to reduce model overfitting.
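One common way to optimize the regularization parameter is to try several candidate values and keep the one with the lowest error on held-out data. The sketch below does this for a one-feature ridge model in plain Python; the data, the candidate grid, and the function names are assumptions for illustration, not a prescribed procedure.

```python
def fit_ridge_1d(xs, ys, lam):
    """Closed-form ridge weight for y ~ w * x (no intercept).

    Minimizes sum((w * x - y)**2) + lam * w**2.
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


def mse(w, xs, ys):
    """Mean squared error of the one-weight model on the given data."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Made-up training and validation data, for illustration only.
train_x, train_y = [1.0, 2.0, 3.0], [1.2, 1.9, 3.3]
valid_x, valid_y = [1.5, 2.5], [1.4, 2.4]

# Fit on the training split for each candidate, score on the validation split.
candidates = [0.0, 0.01, 0.1, 1.0, 10.0]
scores = {
    lam: mse(fit_ridge_1d(train_x, train_y, lam), valid_x, valid_y)
    for lam in candidates
}
best_lam = min(scores, key=scores.get)
print(best_lam, scores[best_lam])
```

In practice a single validation split is often replaced by k-fold cross-validation, but the selection logic is the same.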

Machine learning encompasses the study of algorithms that learn from data. It has been a key component in a number of problem domains, including computer vision and natural language processing. A regression model that uses the L1 regularization technique is called Lasso Regression, and a model that uses L2 is called Ridge Regression.

Equations for logistic regression.
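For reference, a common matrix-form statement of logistic regression with an L2 penalty is sketched below. The notation is an assumption and may differ from the equations pictured earlier: $X$ is the $m \times n$ design matrix, $y$ the vector of 0/1 labels, $w$ the weight vector, and $\lambda$ the regularization parameter. Setting $\lambda = 0$ gives the unregularized case.

```latex
\begin{aligned}
\hat{y} &= \sigma(Xw), \qquad \sigma(z) = \frac{1}{1 + e^{-z}} \\
J(w) &= -\frac{1}{m}\left[\, y^\top \log \hat{y} + (1 - y)^\top \log(1 - \hat{y}) \,\right] + \frac{\lambda}{2m}\,\lVert w \rVert_2^2 \\
\nabla_w J(w) &= \frac{1}{m}\, X^\top (\hat{y} - y) + \frac{\lambda}{m}\, w
\end{aligned}
```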
